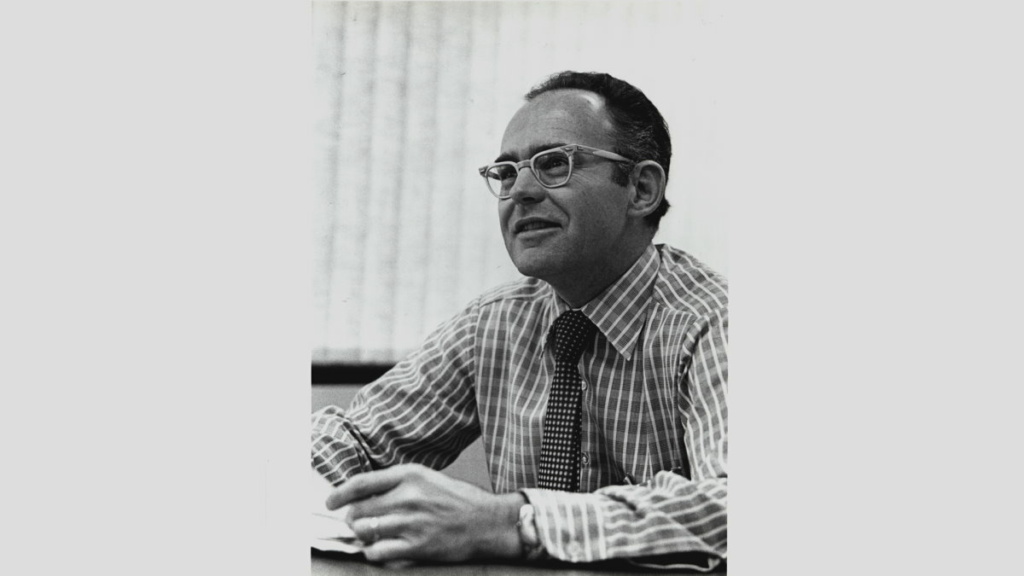

Gordon Moore (1929-2023) saw the future – but did he also create it?

It took decades for Gordon Moore to be able to speak of his eponymous law with a straight face. “I couldn’t even utter the term for a long time. It just didn’t seem appropriate. But as it became something that almost drove the semiconductor industry rather than just recording its progress, I became more relaxed about the term,” Moore said in a special issue of IEEE Spectrum celebrating the 50th anniversary of his famous prediction.

This quote reveals how Moore himself thought about ‘his’ law: as a prediction that turned into a self-fulfilling prophecy. Tracking all sorts of parameters of the integrated circuits that the budding semiconductor industry started churning out in the early 1960s, Moore – at the time still at Fairchild Semiconductor – noticed that one of them produced a nice straight line in a logarithmic plot, showing that the number of components per chip had doubled every year. Extrapolating from just four data points, he predicted in his 1965 Electronics article that this rate of improvement would “lead to such wonders as home computers or at least terminals connected to a central computer, automatic controls for automobiles and personal portable communications equipment.”
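To see why yearly doubling shows up as a straight line in a logarithmic plot, here is a minimal Python sketch. The starting count of 64 components in 1965 and the function name are illustrative assumptions, not figures taken from this article.

```python
import math

def components(year, base_year=1965, base_count=64, doubling_years=1.0):
    """Components per chip under steady doubling.

    base_count and base_year are illustrative assumptions,
    not data from the article.
    """
    return base_count * 2 ** ((year - base_year) / doubling_years)

# The base-2 logarithm of the count rises by exactly 1 per doubling
# period, which is what makes the logarithmic plot a straight line.
for year in range(1965, 1971):
    n = components(year)
    print(year, round(n), math.log2(n))
```

Changing `doubling_years` changes only the slope of the line, not its straightness.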

As famous as the article is today, at the time it didn’t make many waves. Caltech researcher Carver Mead is often credited with getting Moore’s Law going – and not only because he was the one who coined the term. Mead often took Moore’s latest plots on the road with him when visiting other academics, electronics companies and the military, becoming a sort of evangelist for the concept. He also gave it credibility by showing that miniaturizing transistors made them better, not worse as was commonly assumed.

As more data points landed in the expected places, the industry’s faith in exponential IC growth solidified. And then, at some point, the prophecy turned into a dictate setting a target pace. “Moore’s Law is really about people’s belief in the future and their willingness to put energy into causing that thing to come about,” Mead said. “More than anything, once something like this gets established, it becomes more or less a self-fulfilling prophecy,” Moore agreed.

Most people in the industry will agree, in fact. Still, there’s another take on Moore’s Law. And since that comes with yet another very reassuring prediction about the future, it feels right to honor Moore’s recent passing by discussing it.

Crawled to a halt

The different perspective is largely the work of Walden “Wally” Rhines. The former Mentor Graphics CEO has argued at many a symposium that the remarkably consistent chip-scaling pace over the decades isn’t the result of a wild extrapolation from four data points but rather of a phenomenon seen in most manufacturing industries: the learning curve. As companies gain experience in making a product, they use inputs such as labor and raw materials more efficiently, thereby lowering the cost per unit of output. That’s no different, says Rhines, for transistors.

Rhines has the data to back up his thesis. The cost of any type of computing switch plotted against the total accumulated volume of switches is a straight line in a log-log graph. So going from vacuum tubes to discrete transistors to integrated circuits, the cost per switch (adjusted for inflation) has always decreased by a fixed percentage with every doubling of the cumulative volume produced.
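A straight line on log-log axes corresponds to a power law, which can be sketched in a few lines of Python. The 20 percent cost drop per doubling and the normalized first-unit cost of 1.0 are assumptions chosen for illustration; they aren't Rhines’s figures.

```python
import math

def unit_cost(cumulative_volume, first_unit_cost=1.0, learning_rate=0.2):
    """Cost per unit under a power-law learning curve.

    learning_rate is the fractional cost drop per doubling of cumulative
    volume. Both defaults are illustrative assumptions, not measured data.
    """
    # Slope of the straight line on log-log axes (negative for falling cost).
    slope = math.log2(1.0 - learning_rate)
    return first_unit_cost * cumulative_volume ** slope

# Each doubling of volume multiplies the unit cost by the same factor (0.8 here).
for volume in (1, 2, 4, 8, 1_000_000):
    print(volume, unit_cost(volume))
```

Note that the curve depends only on how many units have been made in total, not on how long it took to make them.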

“Think of the learning curve as sitting one rung above Moore’s Law in the taxonomy of theories. The data suggest that the learning curve is a universal phenomenon, as near a law of nature as exists in the world of technology manufacturing and indeed anywhere humans engage in a repeated task. Stated plainly, the learning curve doesn’t care how we achieve the reduction in cost per switch, only that we inevitably do since we always have,” Rhines wrote in a guest blog in Scientific American.

The implication is that Moore’s Law, rather than being a self-fulfilling prophecy, is somehow inherent in science and technology itself: not only in solid-state technology but also in solar cells, batteries, DNA sequencing and many other sectors. In a way, Moore’s Law was inevitable, although the pace might depend on the society it was born into. Aliens in a faraway galaxy or Soviet engineers completely shielded from the West would have ended up reducing cost exponentially, too.

The cheery consequence of this perspective is that computing technology will keep improving long after Moore’s Law has finally crawled to a halt. “Moore’s Law will indeed become irrelevant to the semiconductor industry. But the remarkable progress in reducing the cost per bit, and cost per switch, will continue indefinitely, thanks to the learning curve,” Rhines wrote. Surely Gordon Moore would have appreciated that.

Main picture credit: Intel