Context, systems can’t do without it

The past eight months have been interesting in our world of computer science and software engineering, both for all of us in general and for the teams I’m working with. Recently, I wrote a bit about Unified Namespaces for industrial automation, which is something we’re spending a lot of time and effort on. In the background, though, there are also the seemingly revolutionary developments in the world of artificial intelligence. Suddenly, everybody seems to be on top of data again, and AI is going to do all the impossible stuff with it that we’ve been unable to do for years.

So, are we going to be out of a job soon? Or are we going to be the front-runners making it happen? For the time being, we’re neither, because there’s no need to be. Artificial intelligence has already been around for decades, and although developments have been speeding up a lot in recent years, there’s not much to be afraid of as far as I’m concerned. On the contrary.

Boring drivel

Just like developments that came before it, starting with the printing press and the first industrial revolution, artificial intelligence is going to help us get rid of the next batch of repetitive work. That’s what it’s already been doing in some areas – what AI algorithms for image detection do isn’t so different from the manual image processing activities we’ve been using since the 1980s, except that they do it on their own, and slightly faster than a human selecting the processing steps by hand.

At the same time, it’s also becoming clear that writers, who were the first to fear for their jobs when ChatGPT showed up, don’t have to be afraid yet. The branch of AI that underlies ChatGPT – large language models (LLMs) – can do a lot of things with language, but it lacks something that human writers have. ChatGPT produces texts based on what it gathers from the historical data it was trained on: not just the content but also the language structures. However, being a piece of technology, an algorithm, it has a hard time capturing emotions in the way humans do. It can’t easily judge whether it should touch somebody by making them sad, happy or angry. With clever prompting (prompt engineering appeared to be a new job description for a few months), it may come close to what you’d expect, but it can’t consistently deliver the real emotional touch of a human writer. And without that clever prompting, the output becomes repetitive, boring drivel, as somebody wrote in an article on using AI for commercial text creation a few weeks ago.

Engineers can’t come up with fitting solutions without knowing the context in which those solutions are to be used, and the same is true for AI algorithms. Take our work on Unified Namespaces (UNS): if we don’t know in what context we’re going to apply the UNS, we won’t be able to select the right technologies to implement it. Neither will an algorithm that’s being asked a similar question. Without context, both the engineer and the algorithm will generally come up with solutions that don’t fit.

We need humans

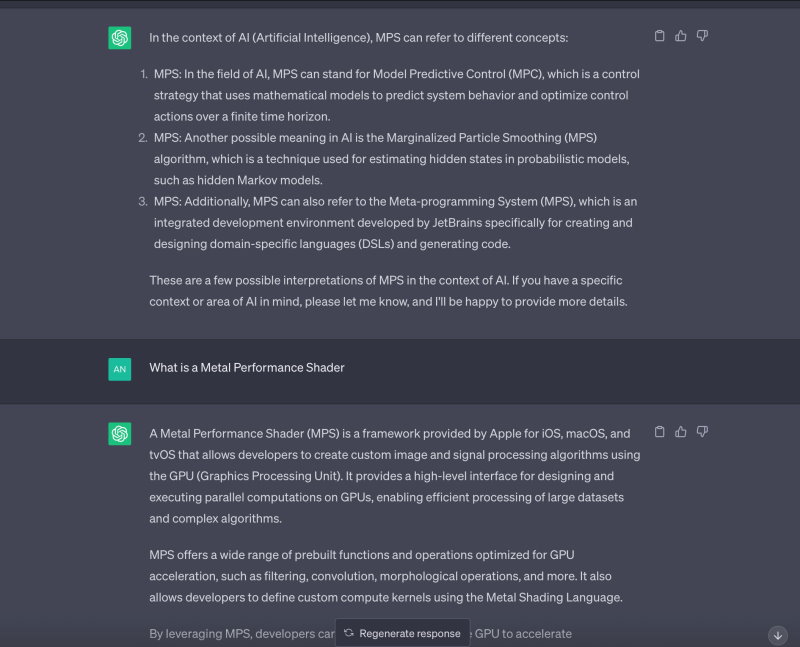

A good case in point was given by Capgemini’s Robert Engels in a Medium article. ChatGPT as it was released last November was built on OpenAI’s GPT-3 family of LLMs (more precisely, a GPT-3.5 model). GPT-3 itself could answer questions in much the same way as ChatGPT, but it was shown to make context-related mistakes. The example Engels gave is that GPT-3 would easily become racist in its responses because it wasn’t given the context of how to act in a civil conversation. With ChatGPT, OpenAI put a context layer around its GPT models, detecting unwanted outcomes and thus letting the model function better in response to the real world.

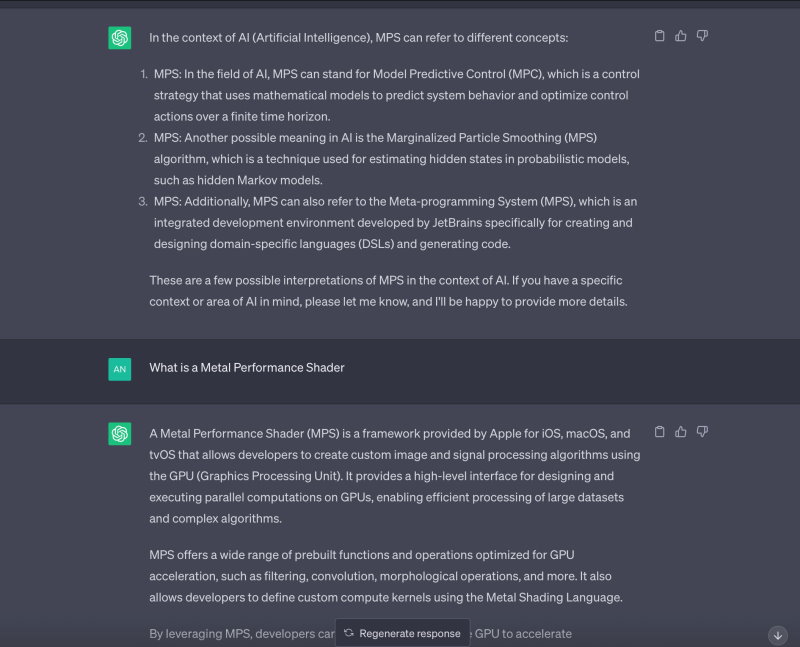

Using the same layer, we can give the model some context to guide it toward an acceptable outcome – this is where ‘prompt engineering’ plays a role. However, even with such a layer, models or algorithms aren’t yet at the point where they can really replace a human in any given area. In software coding, ChatGPT and its quickly growing number of competitors and alternatives still make mistakes. In writing, the models still fail to show emotion. Similar reasoning undoubtedly applies to other domains.
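To make the idea of a context layer a bit more tangible, here’s a minimal Python sketch. It’s an illustration under assumptions, not a description of how OpenAI built ChatGPT: the `ContextLayer` class, the `echo_model` stand-in and the keyword check are all hypothetical, and a real system would use a trained model and a far more capable filter than a list of blocked terms. What it shows is the two roles such a layer plays: adding context to every prompt, and catching unwanted output before it reaches the user.

```python
from dataclasses import dataclass, field
from typing import Callable, List

# Hypothetical stand-in for a raw language model: prompt in, text out.
Model = Callable[[str], str]


@dataclass
class ContextLayer:
    """Illustrative wrapper that adds context and screens model output."""

    model: Model
    context: str  # guidance prepended to every prompt
    blocked_terms: List[str] = field(default_factory=list)
    fallback: str = "Sorry, I can't help with that."

    def ask(self, question: str) -> str:
        # 1. Give the model the context it would otherwise lack.
        prompt = f"{self.context}\n\nUser: {question}"
        answer = self.model(prompt)
        # 2. Detect unwanted outcomes (here: a crude keyword check).
        lowered = answer.lower()
        if any(term in lowered for term in self.blocked_terms):
            return self.fallback
        return answer


def echo_model(prompt: str) -> str:
    """Toy model (assumption): repeats the last line of the prompt."""
    return "You asked: " + prompt.splitlines()[-1]


layer = ContextLayer(
    model=echo_model,
    context="Answer politely and stay on topic.",
    blocked_terms=["insult"],
)
print(layer.ask("What is a Unified Namespace?"))
print(layer.ask("Give me an insult"))
```

The first question passes through unchanged; the second trips the filter and returns the fallback text. The point of the design is that the model itself stays the same, and everything it knows about the situation it’s operating in comes from the layer a human wrapped around it.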

We still need to provide context, and as long as the models remain unaware of the world around them, we need humans to provide that context. So, instead of being afraid, we should be curious about what the future will bring. I’m also curious to hear your opinion about how this is going to develop. Feel free to contact me.